Recommendations and limitations for creating Virtual Agent slots

When you enable Virtual Agent, you can use it to configure slots with AI. Before you configure your slots and slot types with Virtual Agent, review the limitations, considerations, and tips that Genesys developers recommend for large language model (LLM) slots. The following table defines the slot types available with Virtual Agent.

| Slot type | Description | Examples |
|---|---|---|
| Numeric sequence | Fixed-length numeric sequences provided by bot participants. | |
| Letter and number combination | Fixed-length alphanumeric sequences provided by bot participants. | |
| Free form | A free-form sequence provided by bot participants, based on a given description. | |

The following sections describe the limitations of each slot type, how these slots handle explicit confirmation and negation from the bot participant, and specific examples.

Numeric slots

Use this slot type when you want the bot to consider only numeric characters as part of the extracted sequences. The bot does not recognize any other characters.

Limitations:

- Entities that exceed the configured maxLength value are not accepted. For example, if the entity value is "123456", maxLength is set to 7, and the customer says "78", the newly extracted entity would be "12345678" with a length of 8. The bot therefore treats the new entity as a noMatch and keeps the entity as "123456".

- Corrections that are not explicit or that target the middle of the extracted entity. In the following examples, the previously extracted entity was 1299554464.

  Examples of corrections that work:

  - "No, change the last two digits from 64 to 62."

  - "The last two digits should be 62."

  Examples of corrections that do not work:

  - "No, 62." The LLM cannot determine what to modify.

  - "No, I meant 62." The LLM cannot determine what to modify.

  - "Change 55 to 44." This input is difficult for the LLM to resolve because the digits are in the middle of the entity.

  - "The entity should start with 5." The LLM might add a 5 at the beginning, or it might correct "1299554464" to "5299554464" as expected.

- The LLM has no problem extracting larger numeric values; however, larger values make corrections more difficult, especially at the start or in the middle of the value. Because of this limitation, Genesys recommends collecting long values in chunks. For example, when you extract a credit card number, ask for the data in chunks of 4 digits. Any error then falls within the last four digits provided, which are easier to correct.
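The maxLength rule for numeric slots can be sketched as a simple append check. This is a minimal illustration only, assuming that each turn's digits are appended to the value captured so far; `append_digits` is a hypothetical helper, not part of a Genesys API, and in the product the LLM applies this rule itself.

```python
from typing import Tuple


def append_digits(current: str, new_digits: str, max_length: int) -> Tuple[str, bool]:
    """Hypothetical sketch of the numeric-slot maxLength rule.

    Returns (value, matched). If the new input contains anything other than
    the ASCII digits 0-9, or the combined value would exceed max_length, the
    turn is treated as a noMatch and the previously captured value is kept.
    """
    # Numeric slots consider only digit characters.
    if not (new_digits.isascii() and new_digits.isdigit()):
        return current, False
    candidate = current + new_digits
    if len(candidate) > max_length:
        # noMatch: keep what was captured before this turn.
        return current, False
    return candidate, True


print(append_digits("123456", "78", 7))  # ('123456', False)
print(append_digits("123456", "7", 7))   # ('1234567', True)
```

Asking for the value in 4-digit chunks keeps each appended piece short, so a rejected or misheard turn only affects the most recent chunk.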

Cases that work well:

- Simple extraction of digits of any length. For example: "My credit card number is 0123456789012232."

- Multi-turn digit extraction, including digits spoken as words. For example:

  - Participant: "My card starts with 0011." Bot: "I have 0011 so far, please continue."

  - Participant: "Then 7831." Bot: "I have 0011 7831 so far, please continue."

  - Participant: "Seven one double oh." Bot: "I have 0011 7831 7100 so far, please continue."

  - Participant: "Finally, 3333." Bot: "I have 0011 7831 7100 3333. Is that correct?"

- Explicit corrections; for example, "Change the last two digits from 84 to 82."

- The LLM treats "double" as two of whatever follows; for example, "double 2" = 22. Ideally, the automatic speech recognition (ASR) converts this response to 22 before it reaches the LLM. "Triple" or "treble" means three of that digit, and "quadruple" means four.

- The LLM treats "oh" as "0" where a digit is expected, but not in unexpected contexts such as "Oh, sorry, I meant..."
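In the product, the LLM performs this interpretation. The rule-based function below is only a hypothetical sketch that reproduces the expected results described above ("double", "triple"/"treble", "quadruple", and "oh" as zero only next to other digits), which can be useful when you write test utterances. The function name, word lists, and the adjacency rule for "oh" are assumptions.

```python
import re

DIGIT_WORDS = {
    "zero": "0", "one": "1", "two": "2", "three": "3", "four": "4",
    "five": "5", "six": "6", "seven": "7", "eight": "8", "nine": "9",
}
MULTIPLIERS = {"double": 2, "triple": 3, "treble": 3, "quadruple": 4}


def _is_digit_context(token: str) -> bool:
    """True for tokens that can appear inside a spoken digit sequence."""
    return (token in DIGIT_WORDS or token in MULTIPLIERS
            or token == "oh" or token.isdigit())


def normalize_spoken_digits(utterance: str) -> str:
    """Hypothetical rule-based approximation of the LLM's digit handling."""
    tokens = re.findall(r"[a-z]+|\d+", utterance.lower())
    digits = []
    repeat = 1  # set by "double", "triple"/"treble", or "quadruple"
    for i, token in enumerate(tokens):
        if token in MULTIPLIERS:
            repeat = MULTIPLIERS[token]
            continue
        if token == "oh":
            prev_ok = i > 0 and _is_digit_context(tokens[i - 1])
            next_ok = i + 1 < len(tokens) and _is_digit_context(tokens[i + 1])
            if not (prev_ok or next_ok):
                continue  # an interjection, as in "Oh, sorry, I meant..."
            value = "0"
        elif token in DIGIT_WORDS:
            value = DIGIT_WORDS[token]
        elif token.isdigit():
            value = token
        else:
            continue  # ignore filler words such as "then" or "finally"
        # A multiplier repeats the first digit that follows it: "double 2" -> "22".
        digits.append(value[0] * repeat + value[1:])
        repeat = 1
    return "".join(digits)


print(normalize_spoken_digits("Seven one double oh"))   # 7100
print(normalize_spoken_digits("Oh sorry, I meant 62"))  # 62
```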

Letter and number slots

Use this slot type to provide hints during extraction when participants use phonetic alphabets, such as the NATO phonetic alphabet. For example, a participant can say "a for alpha" and the extracted character is "A".

Limitations:

- Entities that exceed the configured maxLength value are not accepted. For example, if the entity value is "A12345", maxLength is set to 7, and the customer says "67", the newly extracted entity would be "A1234567" with a length of 8. The bot therefore treats the new entity as a noMatch and keeps the entity "A12345".

- Duplicate characters across multiple turns. If the entity extracted in turn 1 is "AB78G" and the customer starts the next turn with another "G", the LLM might incorrectly return "AB78G" instead of "AB78GG".

- Ambiguous corrections. For example, if in turn 1 the customer said "A for apple, C 72", which was extracted as "AC72", the customer might make a difficult correction in the next turn, such as "No, I said AZ."

- The LLM has no problem extracting larger alphanumeric values; however, larger values make corrections more difficult, especially at the start or in the middle of the value. Because of this limitation, Genesys recommends collecting long values in chunks. For example, when you extract a passport number, ask for the data in chunks of 3 characters. Any error then falls within the last 3 characters provided, which are easier to correct.
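If your flow keeps track of the previous value and of how many characters the participant supplied in the current turn (an assumption; the hypothetical `check_append_turn` helper below is not a Genesys API), a simple post-check can flag the maxLength and duplicate-character cases described above so that the bot can ask for confirmation.

```python
import re

# Extracted values are assumed to be uppercase letters and digits only.
ALNUM = re.compile(r"[A-Z0-9]*")


def check_append_turn(previous: str, new_value: str,
                      chars_in_turn: int, max_length: int) -> str:
    """Hypothetical post-check for an alphanumeric slot on an append turn.

    Returns "noMatch" when the value is too long or contains characters other
    than A-Z and 0-9, "reconfirm" when the value grew by fewer characters than
    the participant supplied (for example, a repeated "G" was dropped), and
    "ok" otherwise. It is not meant for correction turns, where the length
    can legitimately stay the same.
    """
    if len(new_value) > max_length or not ALNUM.fullmatch(new_value):
        return "noMatch"
    if len(new_value) - len(previous) < chars_in_turn:
        return "reconfirm"
    return "ok"


print(check_append_turn("AB78G", "AB78G", 1, 10))  # reconfirm
```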

Cases that work well:

- Alphanumeric extraction of any length with phonetic spelling of letters, plainly spoken letters, and numbers. For example: "My passport number is a for apple, b for beta, c for charlie, d 8909."

- Multi-turn alphanumeric extraction, including digits spoken as words. For example:

  - Participant: "My member number starts with AB11." Bot: "I have AB11 so far, please continue."

  - Participant: "Then c for charlie and z for zeta." Bot: "I have AB11 CZ so far, please continue."

  - Participant: "Beta alpha." Bot: "I have AB11 CZ BA so far, please continue."

  - Participant: "Finally, 99." Bot: "I have AB11 CZ BA 99. Is that correct?"

- Explicit corrections. For example, "No, the last letter should have been Z for zeta, not C."

- The LLM treats "double" as two of whatever follows; for example, "double 2" = 22. Ideally, the ASR converts this response to 22 before it reaches the LLM. "Triple" or "treble" means three of that digit, and "quadruple" means four.

- The LLM treats "oh" as "0" where a digit is expected, but not in unexpected contexts such as "Oh, sorry, I meant..."
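As with numeric slots, the LLM handles phonetic spelling in the product. The following hypothetical rule-based sketch only illustrates the expected results for the spoken forms shown above: "letter for word", single spoken letters, code words on their own, and digit strings. The function name and the code-word list (NATO words plus "beta" and "zeta", which the examples use) are assumptions. It deliberately does not handle letter groups that the ASR already transcribed, such as "AB11", and it treats every lone letter as a character.

```python
import re

# Assumed code-word list: NATO words plus "beta" and "zeta" from the examples.
CODE_WORDS = {
    "alpha", "bravo", "charlie", "delta", "echo", "foxtrot", "golf", "hotel",
    "india", "juliett", "juliet", "kilo", "lima", "mike", "november", "oscar",
    "papa", "quebec", "romeo", "sierra", "tango", "uniform", "victor",
    "whiskey", "xray", "yankee", "zulu", "beta", "zeta",
}


def spell_alphanumeric(utterance: str) -> str:
    """Hypothetical rule-based approximation of phonetic spelling extraction."""
    tokens = re.findall(r"[a-z]+|\d+", utterance.lower())
    out = []
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        # "a for apple": a letter, then "for", then a word starting with that letter.
        if (len(tok) == 1 and tok.isalpha() and i + 2 < len(tokens)
                and tokens[i + 1] == "for" and tokens[i + 2].startswith(tok)):
            out.append(tok.upper())
            i += 3
            continue
        if len(tok) == 1 and tok.isalpha():
            out.append(tok.upper())  # a single spoken letter, such as "d"
        elif tok in CODE_WORDS:
            out.append(tok[0].upper())  # a code word on its own, such as "beta"
        elif tok.isdigit():
            out.append(tok)
        # Any other word ("my", "number", "then", "and") is treated as filler.
        i += 1
    return "".join(out)


print(spell_alphanumeric("My passport number is a for apple, b for beta, c for charlie, d 8909"))
# ABCD8909
```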

Free-form slots

Use these slots when you want the bot to recognize a textual description of the entity to capture; for example, an address with the street name, city, and PIN code.

Limitations:

- Addresses

  - Address formatting according to country-specific rules. The bot relies on the description that you provide to format the address correctly.

  - Casing: Capitalization is generally correct; however, the extracted entity occasionally appears in all lowercase or all uppercase.

- Email addresses

  - Incorrect identification of custom domain names in multi-turn conversations. Results are most accurate when the participant provides the email address in a single turn.

  - Cases where the ASR transcription does not convert spoken hyphens, periods, and underscores into the corresponding characters.

- Names

  - Characters are missing or reordered when participants spell out long names.
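To make the email limitation concrete, the hypothetical sketch below shows the conversion that is missing when the ASR leaves symbols as spoken words. It is an assumption-based illustration (for example, for building test transcripts), not a Genesys feature; the function name and the word-to-symbol table are assumptions.

```python
# Assumed mapping of spoken words to the symbols they stand for.
SPOKEN_SYMBOLS = {
    "at": "@",
    "dot": ".",
    "period": ".",
    "dash": "-",
    "hyphen": "-",
    "underscore": "_",
}


def normalize_spoken_email(transcript: str) -> str:
    """Hypothetical clean-up for an email transcript that contains spoken
    symbols: each listed word becomes its symbol, and spaces are removed."""
    tokens = transcript.lower().split()
    return "".join(SPOKEN_SYMBOLS.get(tok, tok) for tok in tokens)


print(normalize_spoken_email("john dot smith at example dot com"))
# john.smith@example.com
```

This naive approach breaks if a real part of the address is spelled as one of the listed words (for example, a mailbox named "dot"), which is one reason the description and confirmation step still matter.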

After each call, the model output contains two parts: the extracted entity, and a Boolean that indicates whether extraction is complete, so the entity detection status is either in progress or completed. For free-form slots, the bot uses the description that you provide to make this decision.

- The model uses the description to judge whether the entity is fully captured or whether parts mentioned in the description are still missing. Ideally, a description states what the entity is and which sub-entities or parts it must contain.

- Participants can also skip the remaining parts. If the customer explicitly says something like "That's it", "I'm done", "That's all", "I don't have it", or "I don't know", the extraction status changes to completed, overriding the description-based collection of sub-entities.
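The completion decision described above can be approximated as follows. This is a hypothetical sketch for reasoning about or testing flows: `SlotOutput`, `evaluate_turn`, the part names, and the phrase list are assumptions, and the actual model judges intent from the description rather than by matching fixed phrases.

```python
from dataclasses import dataclass
from typing import Set

# Assumed skip phrases, based on the examples above.
SKIP_PHRASES = ("that's it", "i'm done", "that's all", "i don't have it", "i don't know")


@dataclass
class SlotOutput:
    entity: str
    completed: bool  # False means "in progress", True means "completed"


def evaluate_turn(entity: str, parts_found: Set[str],
                  required_parts: Set[str], utterance: str) -> SlotOutput:
    """Hypothetical sketch of the free-form completion decision."""
    text = utterance.lower().replace("\u2019", "'")  # normalize curly apostrophes
    # An explicit "that's it" overrides description-based collection.
    if any(phrase in text for phrase in SKIP_PHRASES):
        return SlotOutput(entity, True)
    # Otherwise, complete only when every part named in the description is present.
    return SlotOutput(entity, required_parts <= parts_found)


required = {"house_number", "pin_code"}
print(evaluate_turn("12 Main Street", {"house_number", "street"}, required, "That's it"))
# SlotOutput(entity='12 Main Street', completed=True)
```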

Free-form examples: Cases where the bot correctly judges the entity detection status

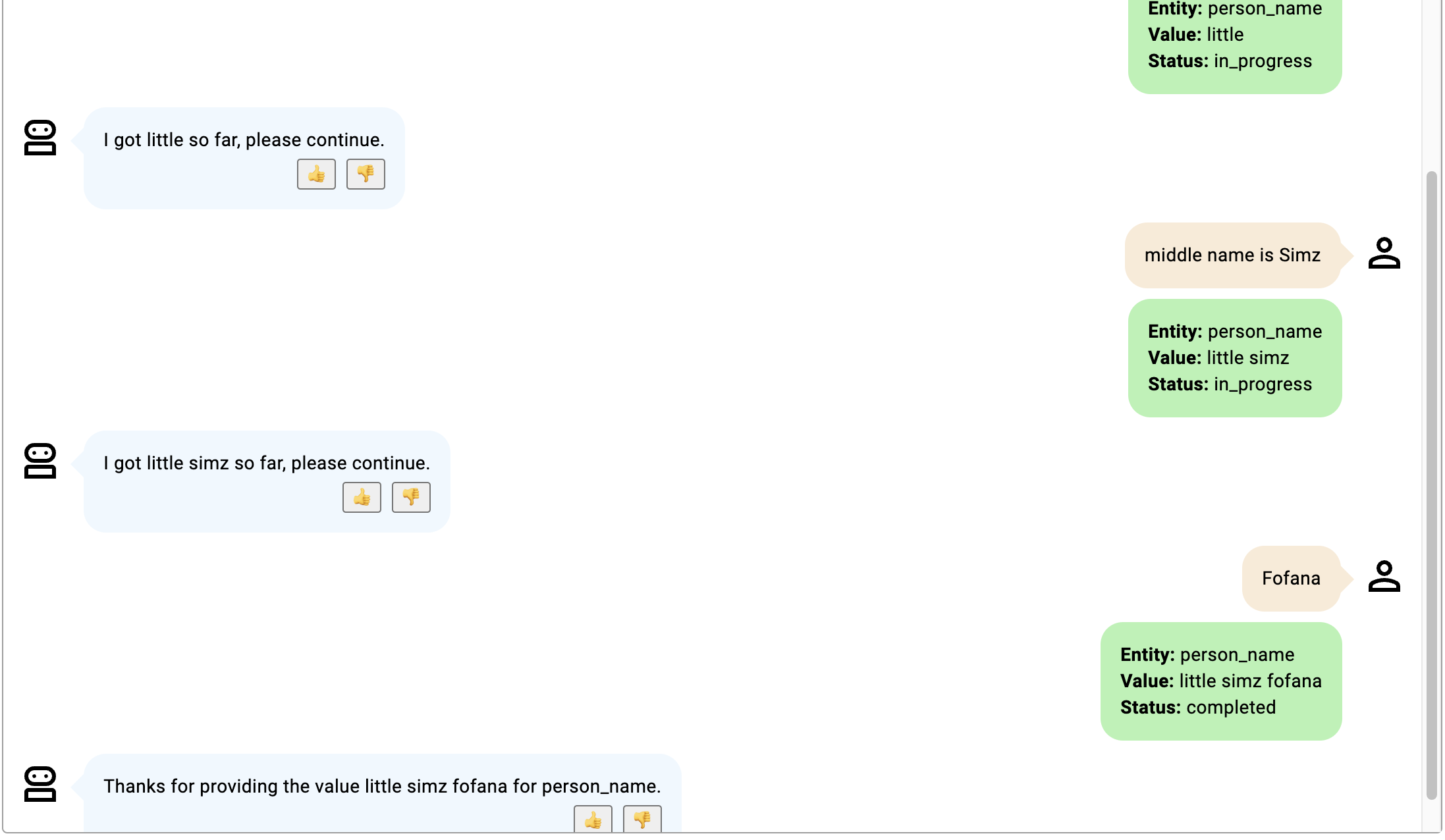

In these examples, the slot is nombre_persona, which describes a person's first name and last name.

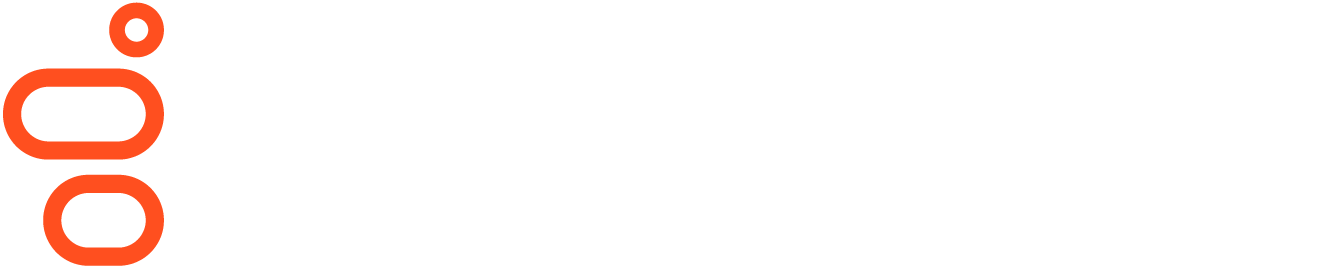

- The bot participant specifically mentions which part of the entity they are providing.

- The bot participant mentions which part of the name they are providing only in the first turn; the model assumes that the next sub-entity the participant provides is the last name.

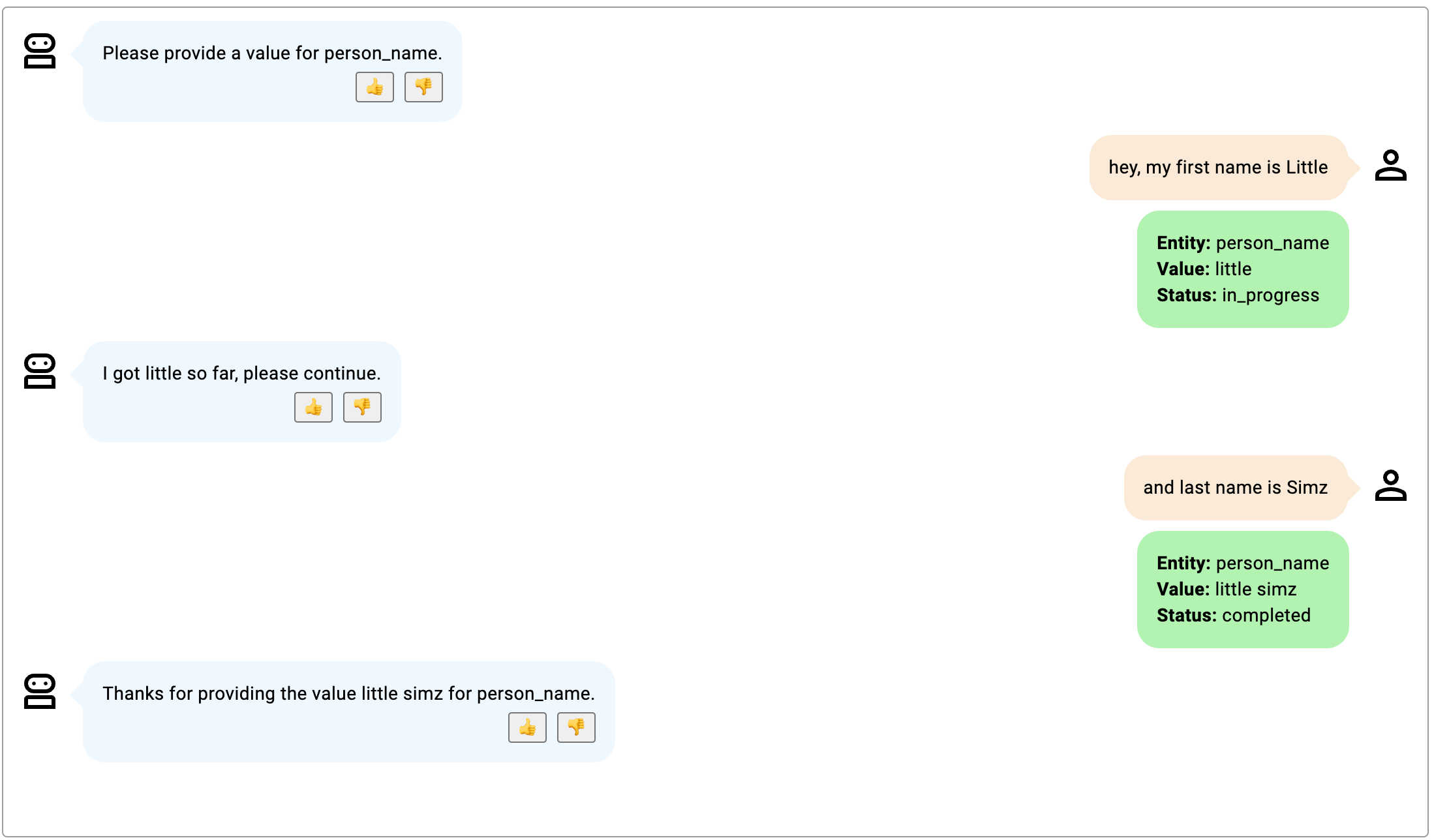

- Both sub-entities are provided at once, and the status changes to completed after the first turn.

- The status remains in progress because the bot participant specifies that the entity they are providing is a middle name, which the description does not mention. As expected, the model assumes that the next entity the participant provides is the last name.

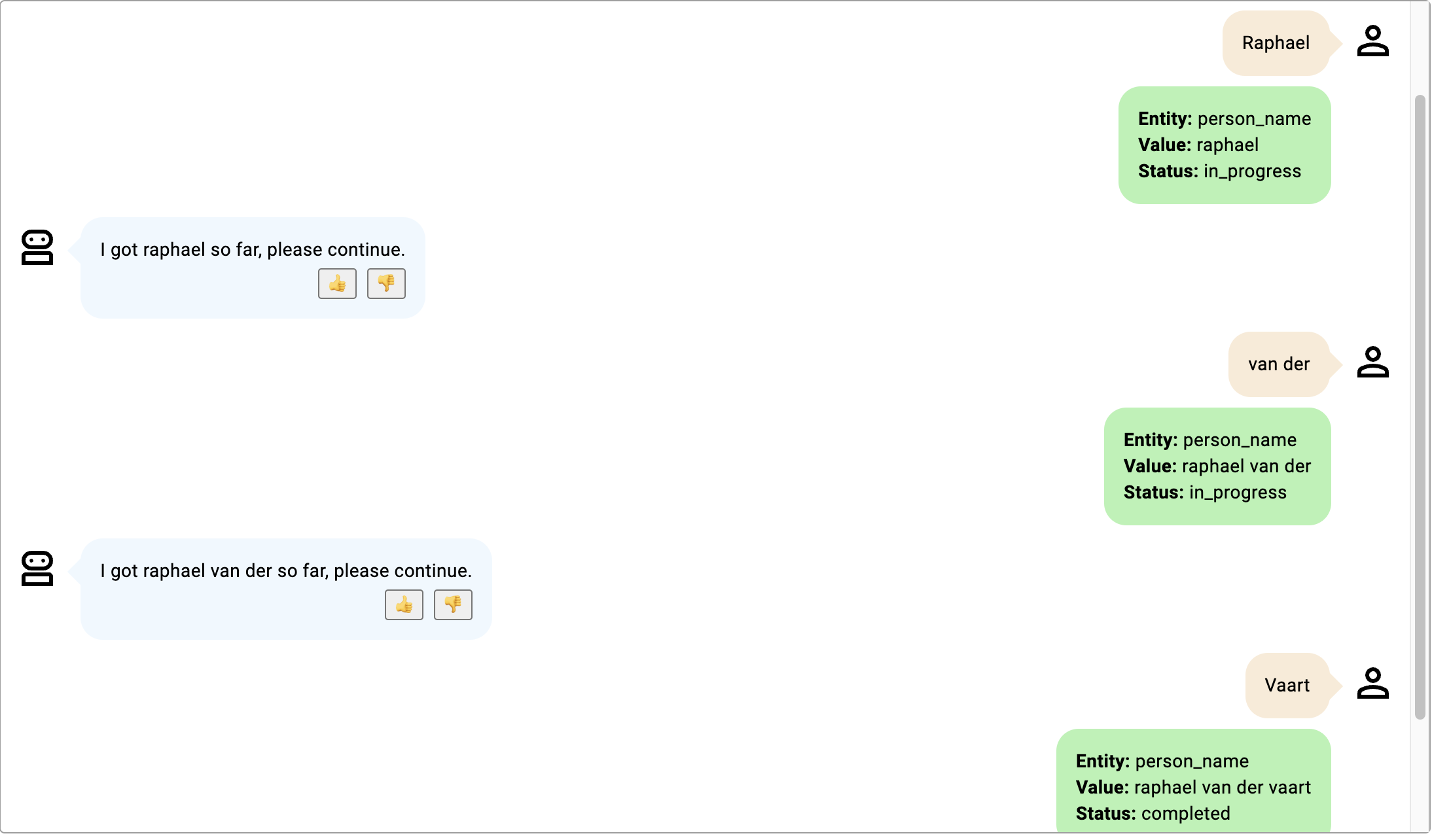

- Although the bot participant does not specify it, and "van der" might be assumed to be a last name, it probably is not one, because "van der" is a commonly used surname prefix rather than a complete surname.

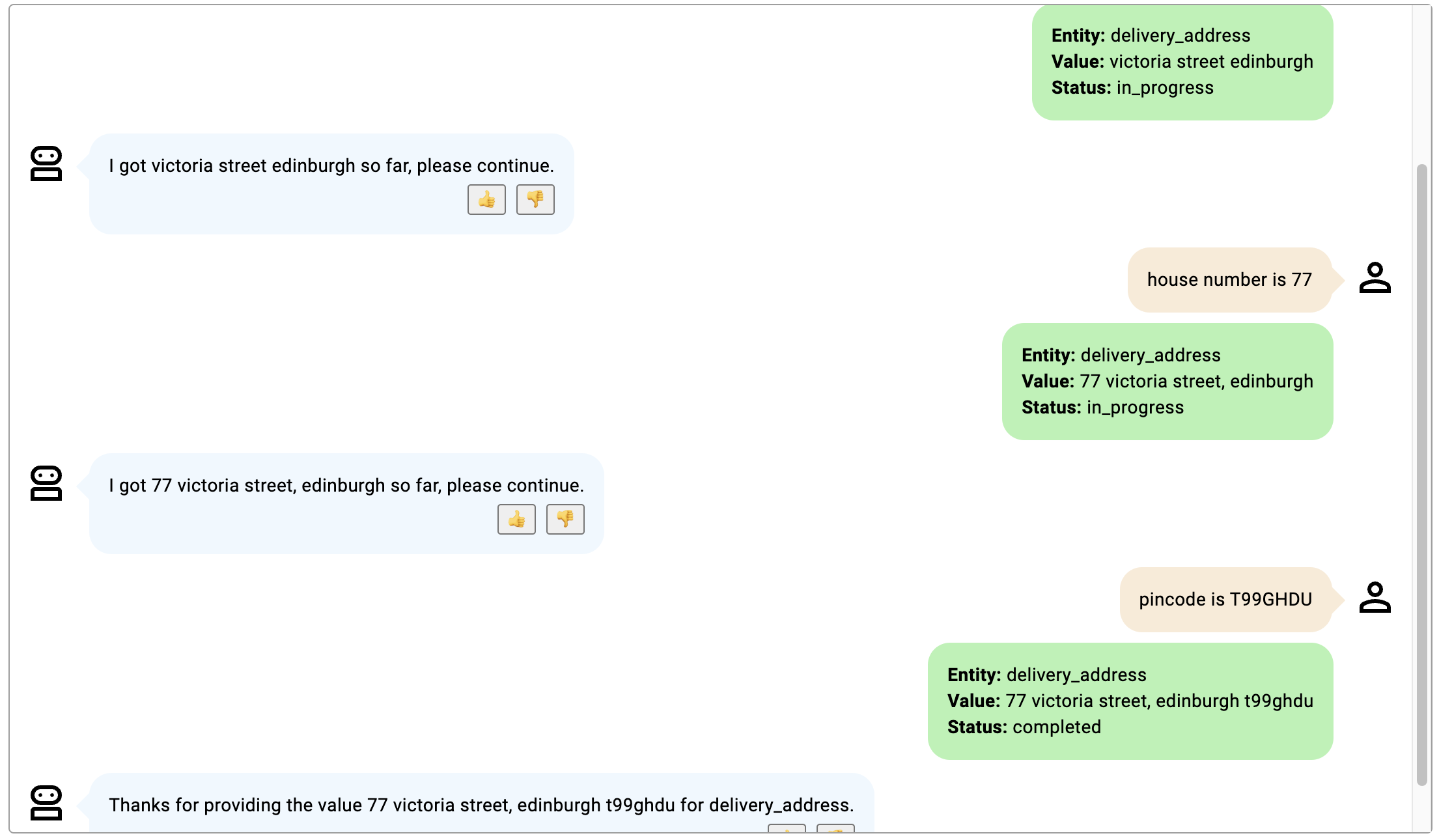

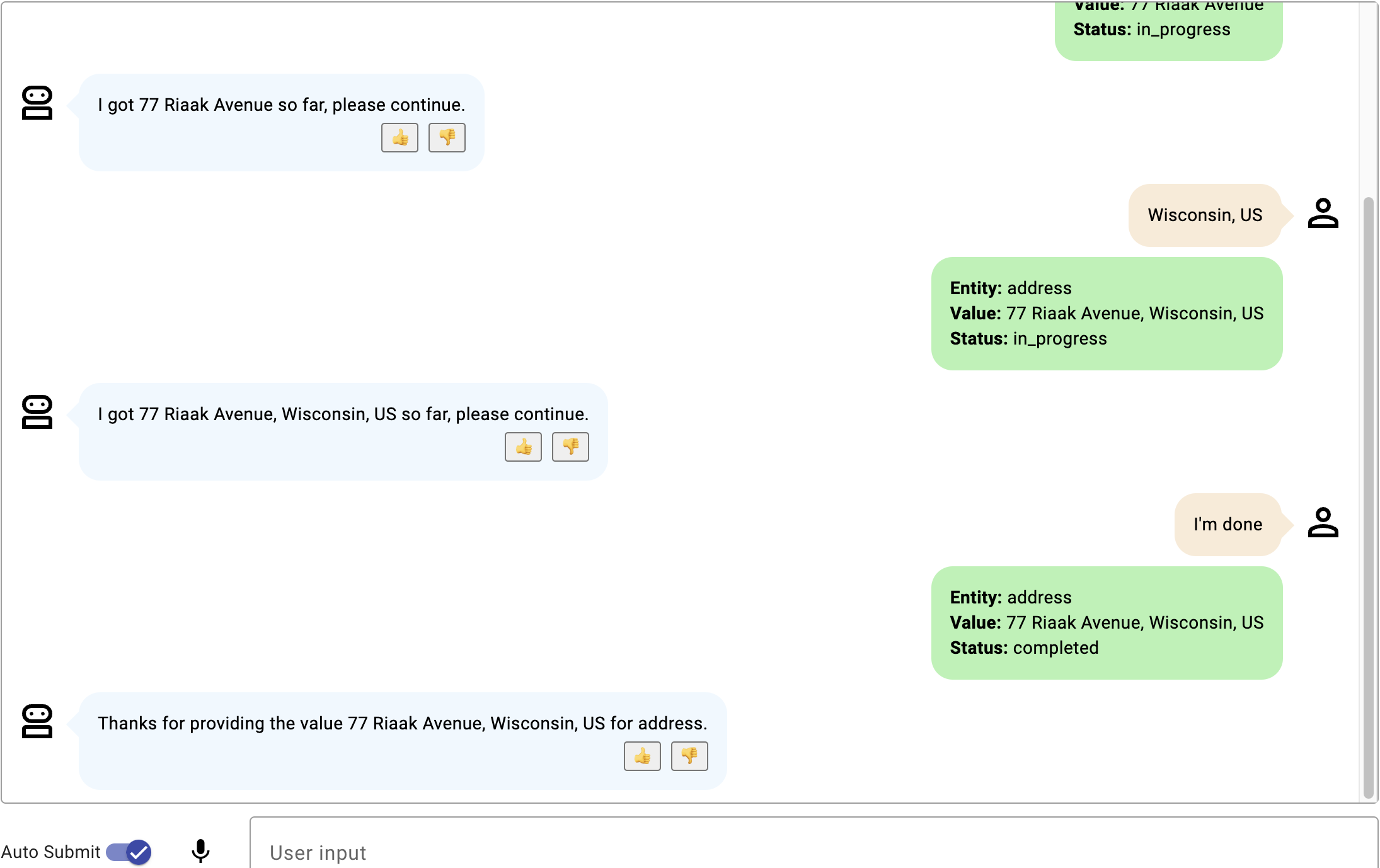

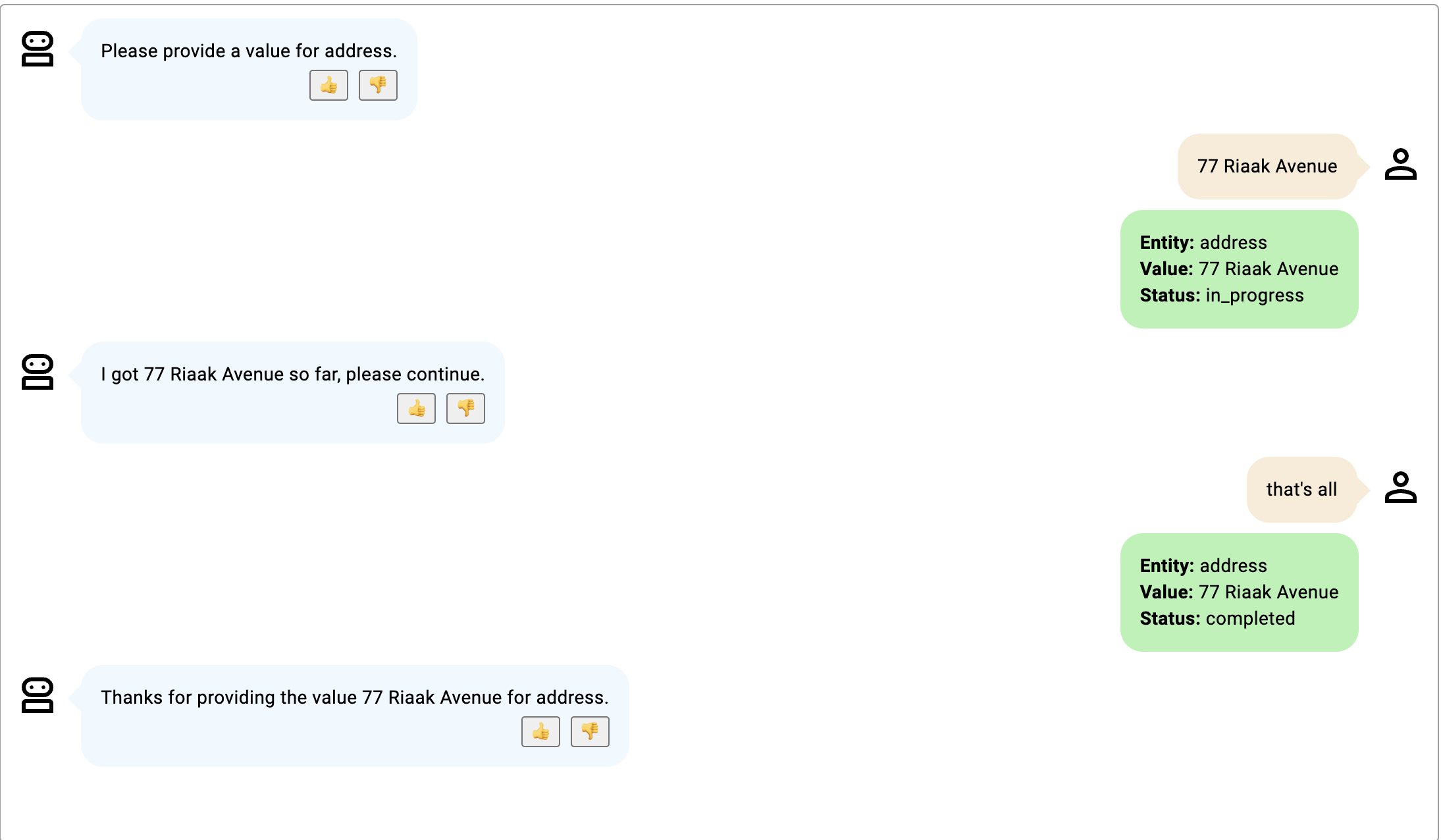

In these examples, the slot is delivery_address, which describes a delivery address that includes the house number and the PIN code.

- The conversation remains in progress until both the house number and the PIN code are provided. The model adds the house number at the beginning of the address and the PIN code at the end.

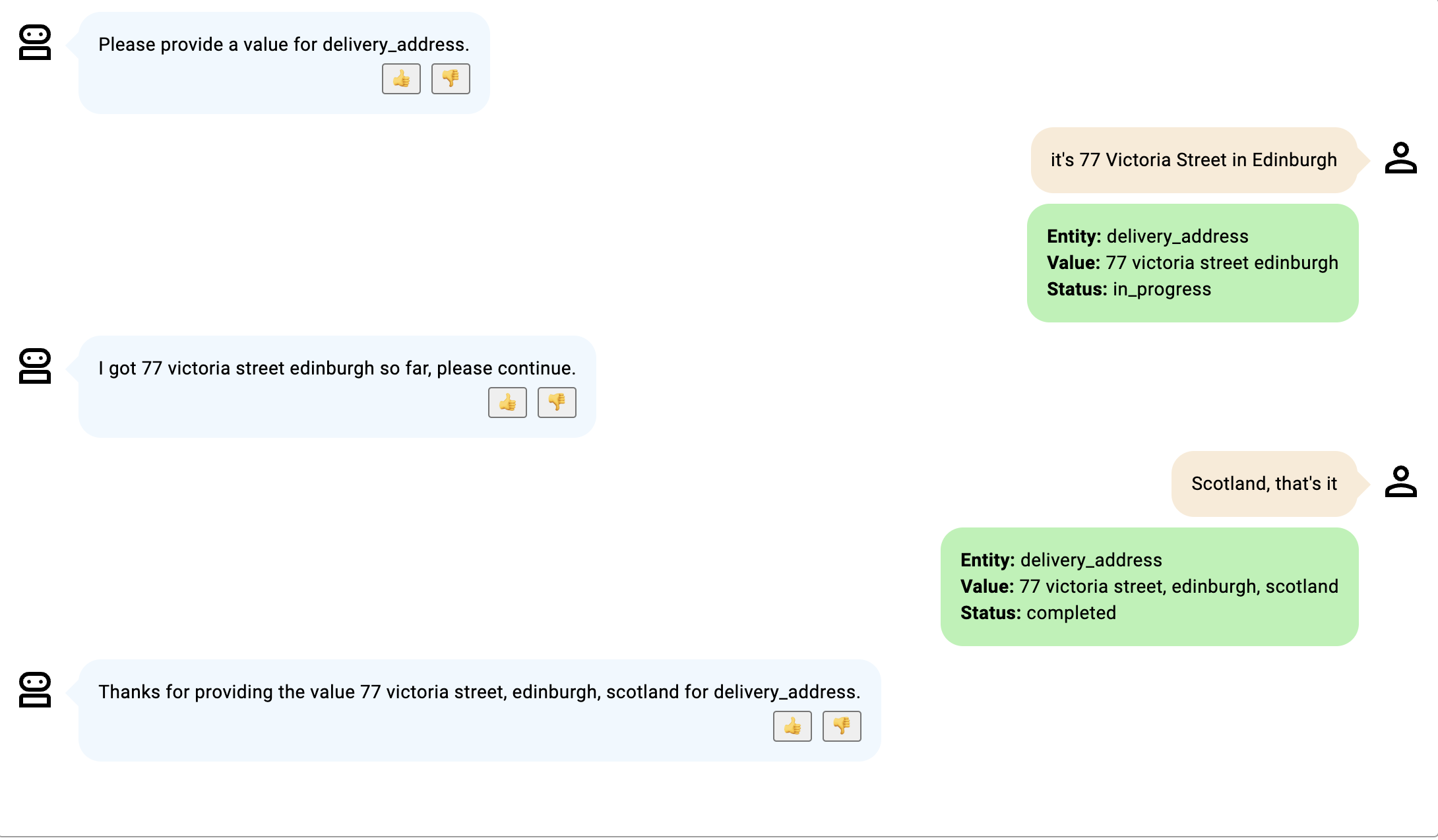

- Although no PIN code was provided, the status changes to completed because the bot participant indicates that they are done. If the participant does not say "that's it", this does not happen: the status changes to completed only when a PIN code is provided.
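The ordering described for delivery_address (house number first, PIN code last) can be illustrated with a small hypothetical helper. The function name, parameters, and sample values are assumptions made for illustration; in the product, the model assembles the entity itself.

```python
from typing import Optional


def assemble_delivery_address(street_and_city: str,
                              house_number: Optional[str] = None,
                              pin_code: Optional[str] = None) -> str:
    """Hypothetical sketch: place the house number first and the PIN code last,
    skipping any part that was not provided."""
    parts = [house_number, street_and_city, pin_code]
    cleaned = [p.strip() for p in parts if p and p.strip()]
    return " ".join(cleaned)


print(assemble_delivery_address("Main Street, Springfield", "42", "123456"))
# 42 Main Street, Springfield 123456
```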

Free-form examples: Early exit behavior

These examples describe early exit scenarios for a delivery_address slot that describes a delivery address with a house number and a PIN code.

- Example 1: Early exit.

- Example 2: Early exit.

- Example 3: Early exit.

- Example 4: Early exit.

For sample conversations that demonstrate how free-form slot capture works, see Free-form slot capture examples.

General considerations

- The quality of slot extraction depends on the quality of the speech-to-text transcription in the voice channel. The principle of "garbage in, garbage out" applies here, because transcription errors propagate into the extracted entity.

- The prompt to the customer should mention that the entity can be provided in one or multiple turns.